October 2025 Data Streaming Launch: Adaptive Linking, Cloud Spanner Connector, and Orca with LangGraph

Modern data teams keep circling the same three priorities: keep streaming costs under control, connect more systems with less custom glue, and turn real-time data into intelligent actions. This month’s release focuses on all three. We’re introducing capabilities that make cross-cluster streaming more cost-efficient, add first-class connectivity for Google Cloud Spanner, and advance event-driven agents with LangGraph support in Orca.

UniLink Adaptive Linking

UniLink introduces Adaptive Linking with two modes—stateful and stateless—to match your migration strategy and rollout pace. The goal is to give teams precise control over offset semantics and rollout sequencing so migrations stop being all-or-nothing and start feeling like an engineering choice.

In stateful mode, UniLink preserves offsets across clusters. Consumers see a continuous stream, cutovers are clean, and rollback remains straightforward because source and destination positions align. To maintain that continuity, the final step requires all producers to switch from the old cluster to the new one in a coordinated window. Stateful is the right fit for strict auditability and environments where you must prove message order and position through a cutover.

In stateless mode, UniLink does not preserve offsets on the destination. That single decision dramatically relaxes producer rollout requirements. When some services need weeks or months to move, you can keep consumers on the destination and let producers migrate on their own schedule. Stateless shines in multi-team migrations, long-tail services, and pipelines built with processors that tolerate an offset change.

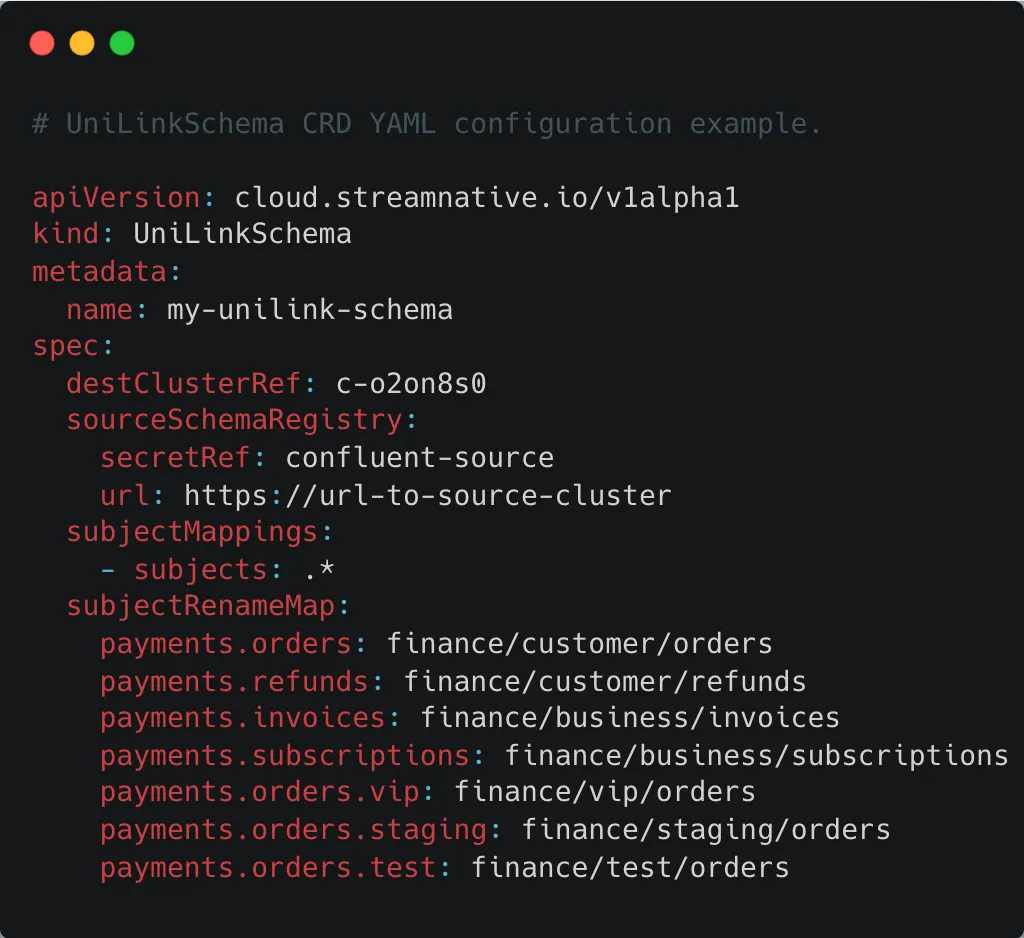
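To make the difference in offset semantics concrete, here is a minimal toy model in Python. It is not UniLink code: `ToyLog`, `mirror_stateful`, and `mirror_stateless` are hypothetical names, and the sketch assumes that in the stateless case the destination may already hold records (for example, from a producer that migrated early), which is one plausible reason destination offsets diverge from source offsets.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToyLog:
    """Toy append-only log where a record's list index is its offset."""
    records: List[str] = field(default_factory=list)

    def append(self, payload: str) -> int:
        self.records.append(payload)
        return len(self.records) - 1  # offset assigned to this record


def mirror_stateful(source: ToyLog) -> ToyLog:
    """Copy records so that each one keeps its source offset."""
    return ToyLog(records=list(source.records))


def mirror_stateless(source: ToyLog, dest: ToyLog) -> Dict[int, int]:
    """Append source records to dest and return {source_offset: dest_offset}."""
    return {src: dest.append(payload) for src, payload in enumerate(source.records)}


source = ToyLog()
for payload in ["order-a", "order-b", "order-c"]:
    source.append(payload)

# Stateful: a consumer position committed on the source is valid on the destination.
stateful_dest = mirror_stateful(source)

# Stateless: the destination assigns its own offsets. Here it already holds one
# record from a hypothetical producer that migrated early.
stateless_dest = ToyLog()
stateless_dest.append("order-x")
offset_map = mirror_stateless(source, stateless_dest)

committed = 1  # hypothetical consumer position on the source
print(stateful_dest.records[committed] == source.records[committed])
print(offset_map)
```

In the toy stateful case, position 1 points at the same record on both sides. In the stateless case, every mirrored record lands one offset later, so consumers that resume on the destination need to tolerate the shift.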

To make re-platforming smoother, UniLink now supports topic-rename mapping. You can mirror payments.orders into finance_orders, reorganize topics under different namespaces, or consolidate topics without breaking schemas or consumer-group behavior. Combined with mode selection, this lets you shape the migration to match your organization: coordinate tightly when you can, or stretch the rollout when you need to.
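One way to reason about a rename plan before applying it is to model the mapping as a pure function. The sketch below is illustrative only and does not reflect UniLink's configuration format: `map_topic`, `plan_mirror`, the prefix rule, and the topic names other than `payments.orders` and `finance_orders` are assumptions made up for the example.

```python
from typing import Dict, List


def map_topic(topic: str, exact: Dict[str, str], prefixes: Dict[str, str]) -> str:
    """Return the destination name: exact rules first, then prefix rules."""
    if topic in exact:
        return exact[topic]
    for old_prefix, new_prefix in prefixes.items():
        if topic.startswith(old_prefix):
            return new_prefix + topic[len(old_prefix):]
    return topic  # no rule matched, so the name is mirrored unchanged


def plan_mirror(topics: List[str], exact: Dict[str, str],
                prefixes: Dict[str, str]) -> Dict[str, List[str]]:
    """Group source topics by destination so consolidations are visible."""
    plan: Dict[str, List[str]] = {}
    for topic in topics:
        plan.setdefault(map_topic(topic, exact, prefixes), []).append(topic)
    return plan


# Hypothetical rules: two source topics consolidate into finance_orders,
# and everything under "legacy." moves to the "archive." namespace.
exact_rules = {
    "payments.orders": "finance_orders",
    "payments.orders_eu": "finance_orders",
}
prefix_rules = {"legacy.": "archive."}

plan = plan_mirror(
    ["payments.orders", "payments.orders_eu", "legacy.audit", "inventory.items"],
    exact_rules,
    prefix_rules,
)
for destination, sources in plan.items():
    print(destination, "<-", sources)
```

Grouping by destination makes consolidations explicit, so a many-to-one mapping is a reviewed decision and not an accident.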

CTA: To try out Adaptive Linking, start with stateful links for consumer moves, then switch to stateless as you migrate producers and reorganize topics with topic-rename mapping.

Debezium Cloud Spanner Source: Streaming Spanner Changes, Managed

Connectivity expands this month with a Debezium Cloud Spanner Source connector. If Spanner powers your transactional workloads, you can now stream its change events into Kafka-compatible topics on StreamNative without custom pollers or batch jobs. The connector listens to Spanner change streams and emits per-row change events in near real time.

Because the connector is fully managed, setup is simple. Provide the connection details: the project ID, Spanner instance ID, Spanner database ID, and change stream name. Optionally, you can also set a start timestamp and an end timestamp to bound the range of changes to read. The platform handles scaling, partitioning, offset management, and delivery into your topics. Operations teams get the same observability they have for other connectors: throughput, lag, error rates, and retry visibility in one place.
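As a rough sketch of what those inputs look like as configuration, the Python helper below assembles and validates them. The function name is hypothetical, and the property keys follow the upstream Debezium Spanner connector's naming as best recalled; treat them as assumptions to verify against the connector documentation, since the managed form in Console may label the fields differently. The ISO 8601 timestamp format is also an assumption.

```python
from datetime import datetime, timezone
from typing import Dict, Optional


def build_spanner_source_config(
    project_id: str,
    instance_id: str,
    database_id: str,
    change_stream: str,
    start_time: Optional[datetime] = None,
    end_time: Optional[datetime] = None,
) -> Dict[str, str]:
    """Assemble a connector config dict from the inputs listed above."""
    required = {
        "project_id": project_id,
        "instance_id": instance_id,
        "database_id": database_id,
        "change_stream": change_stream,
    }
    missing = [name for name, value in required.items() if not value]
    if missing:
        raise ValueError(f"missing required settings: {', '.join(missing)}")
    if start_time and end_time and end_time <= start_time:
        raise ValueError("end_time must be later than start_time")

    # Keys below are assumed from upstream Debezium naming; verify before use.
    config = {
        "connector.class": "io.debezium.connector.spanner.SpannerConnector",
        "gcp.spanner.project.id": project_id,
        "gcp.spanner.instance.id": instance_id,
        "gcp.spanner.database.id": database_id,
        "gcp.spanner.change.stream": change_stream,
    }
    if start_time is not None:
        config["gcp.spanner.start.time"] = start_time.isoformat()
    if end_time is not None:
        config["gcp.spanner.end.time"] = end_time.isoformat()
    return config


# All identifiers here are placeholder values for illustration.
config = build_spanner_source_config(
    project_id="my-project",
    instance_id="orders-instance",
    database_id="orders-db",
    change_stream="orders_changes",
    start_time=datetime(2025, 10, 1, tzinfo=timezone.utc),
)
for key, value in config.items():
    print(f"{key}={value}")
```

Validating the four required identifiers and the timestamp ordering up front catches the most common setup mistakes before the connector is created.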

This unlocks clear patterns. Microservices can subscribe to Spanner change topics to trigger workflows the moment a business event lands—order confirmations, fraud checks, fulfillment starts. Analytics teams can keep lakehouse tables fresh without nightly ETL, cutting latency from hours to minutes. Platform owners can centralize CDC across databases while keeping a single streaming backbone for transport, governance, and replay.

For developers already familiar with Debezium, the experience will feel natural: declarative configuration, a clear event model, and strong compatibility with the Kafka Connect ecosystem. For teams new to CDC, the value is turning operational state into event streams with a few clicks while the platform handles the heavy lifting.

CTA: In Console, add a Cloud Spanner Source and stream your first table to a Kafka-compatible topic in minutes.

Orca Agent Engine with LangGraph (Private Preview)

During the Data Streaming Summit last month, we announced the Orca Agent Engine in Private Preview; today we’re expanding that preview with native LangGraph support, alongside integrations with the Google Agent Development Kit (ADK) and the OpenAI Agents SDK.

The idea remains the same: if your core systems publish events, your AI should live where those events happen. Orca gives agents a durable, stream-native runtime—subscribe to topics, maintain memory, call tools and services, and emit new events—with concurrency, fault tolerance, and observability built in. LangGraph adds a structured way to design agent workflows: explicit multi-step reasoning, tool use, retries, and memory, expressed as graphs that Orca executes against live streams. Because every perception and action flows through the log, you get persistent context, a complete audit trail, and replay for debugging or compliance—no more ephemeral prompts or black-box decisions.

This is how event-driven agents move past prototypes. A monitoring agent can listen to security alerts, correlate with asset inventories, open tickets, and post runbooks when thresholds trip. A revenue operations agent can watch order and payment topics, reconcile anomalies against a system of record, and notify finance with the exact items to investigate. A developer productivity agent can observe CI events and test outcomes, file issues with the right context, and propose fixes based on known patterns. In each case, the agent is a long-lived service woven into your event fabric, not a one-off request.
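The monitoring example can be sketched as a small graph of steps that runs once per incoming event and records every step it takes. This is a plain-Python stand-in, not the LangGraph or Orca API: the node functions, the `ASSETS` inventory, the severity threshold, and the event shapes are all hypothetical, and the `audit_log` list merely stands in for the durable log described above.

```python
from typing import Callable, Dict, List, Optional

State = dict
Node = Callable[[State], Optional[str]]  # returns the next node name, or None to stop

# Hypothetical asset inventory the agent correlates alerts against.
ASSETS = {"db-1": {"tier": "critical"}, "dev-7": {"tier": "sandbox"}}


def correlate(state: State) -> Optional[str]:
    asset = ASSETS.get(state["alert"]["host"], {})
    state["critical"] = asset.get("tier") == "critical"
    return "decide"


def decide(state: State) -> Optional[str]:
    # Escalate only when a critical asset trips the (assumed) severity threshold.
    if state["critical"] and state["alert"]["severity"] >= 7:
        return "open_ticket"
    return None


def open_ticket(state: State) -> Optional[str]:
    # "Emitting" here means appending to a list that stands in for an output topic.
    state["emitted"].append({"type": "ticket.opened", "host": state["alert"]["host"]})
    return None


GRAPH: Dict[str, Node] = {
    "correlate": correlate,
    "decide": decide,
    "open_ticket": open_ticket,
}


def run_agent(alert: dict, audit_log: List[str]) -> List[dict]:
    """Run one alert through the graph, recording each visited node."""
    state: State = {"alert": alert, "emitted": []}
    node: Optional[str] = "correlate"
    while node is not None:
        audit_log.append(node)
        node = GRAPH[node](state)
    return state["emitted"]


audit: List[str] = []
events = run_agent({"host": "db-1", "severity": 9}, audit)
print(events)
print(audit)
```

Because each visited node is appended to the audit list, the path the agent took for a given alert can be inspected or replayed afterward, which is the property the log-centric design is meant to provide.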

Getting started is intentionally simple. Point a LangGraph agent at a single topic, let Orca handle scaling and backpressure, then grow into multi-topic workflows, shared tools, and cross-agent collaboration.

CTA: Request Orca Private Preview access and deploy your first LangGraph agent on live streams.

Putting It Together

Adaptive Linking removes migration timing risk. Start with stateful to move consumers first with offsets preserved; when you migrate producers, switch to stateless to relax rollout and finish at your pace. Topic-rename mapping lets you reorganize topics during the move instead of after. The Debezium Cloud Spanner Source turns Spanner changes into first-class streams, replacing nightly jobs and custom bridges with a managed connector that keeps downstream data fresh. Orca with LangGraph turns those streams into action: agents subscribe to events, keep state, call tools, and emit new events with full audit trails and replay.

CTA: Try different linking modes and topic-rename mapping for your next migration, configure the Cloud Spanner Source in Console, and request access to Orca (Private Preview) with LangGraph. Adaptive Linking and the Spanner connector are available now in StreamNative Cloud, and Orca with LangGraph is available through the preview program.
