Pulsar Newbie Guide for Kafka Engineers (Part 6): Schema Management in Pulsar

TL;DR

Pulsar has a built-in schema registry that travels with the cluster – no extra servers needed. Producers and consumers can define a schema (Avro, JSON, Protobuf, etc.), and Pulsar brokers ensure compatibility and store schema versions. We’ll cover how to use Pulsar’s schema management, compare it to Kafka’s external Schema Registry (like Confluent’s), and show CLI tools (pulsar-admin schemas) to upload or fetch schemas. Key point: Pulsar enforces schema compatibility at the broker if configured, preventing bad or incompatible data from being written, which is a powerful feature for data quality.

Schemas 101 in Pulsar

In Apache Kafka, schema management is typically handled outside of Kafka brokers – for example, using Confluent Schema Registry. Producers write a schema ID with data, and consumers retrieve the schema from the registry. Kafka itself is schema-agnostic (it treats messages as byte arrays).

Apache Pulsar, on the other hand, treats schema as a first-class concept:

- Pulsar messages are still bytes on the wire/storage, but you can associate a schema (structure) with a topic, and Pulsar will validate incoming messages against it.

- The broker stores schema definitions in a schema registry (backed by its metadata store / BookKeeper) and assigns each a version.

- When a producer or consumer connects with a schema, the broker can check compatibility with existing schema for that topic and either allow or reject the new schema if it’s incompatible (depending on your policy).

This is huge: it means you cannot accidentally write a message with the wrong schema (if schema enforcement is on). In Kafka, nothing stops a producer from writing gibberish or an unexpected format, aside from conventions.

By default, Pulsar’s schema registry supports Avro, JSON, Protobuf, and a few others out-of-the-box. You define schemas using these or provide your own POJOs (in Java, for example, Pulsar can derive an Avro schema from a class).

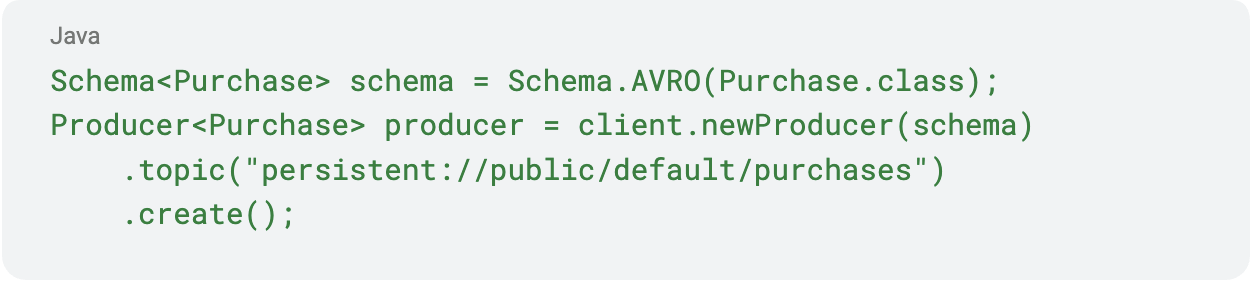

Example:

This will register the Avro schema of the Purchase class for the topic purchases if not already present. If a schema is already there, the broker will check compatibility. If it’s compatible (say you added a new optional field), it will add a new version; if it’s incompatible (breaking change), it can reject the producer’s attempt to connect.

Schema Compatibility

Similar to Confluent’s registry where you set backward/forward compatibility rules, Pulsar allows setting a compatibility strategy per namespace (or cluster). Options include:

AlwaysCompatible(no checks, anything goes),Backward(new schema can read data written with old schema),Forward(old readers can read new schema data),Full(both backward and forward),- etc.

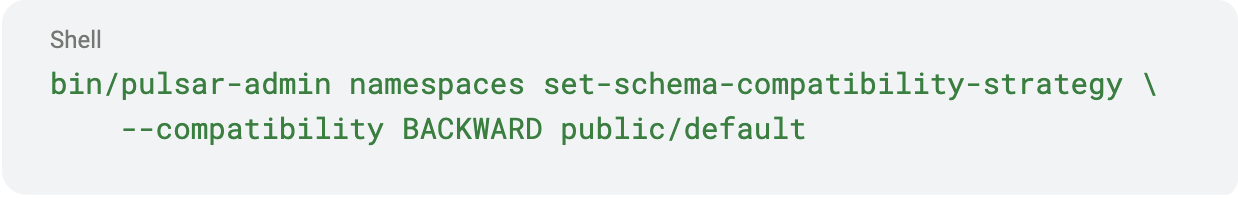

By default, Pulsar’s policy might be BACKWARD (depending on version). You can adjust it:

This means producers can evolve the schema (add fields, make them optional, etc.) as long as new consumers could still decode old messages (backward compatibility). If someone tries to remove a required field (breaking change), the broker will reject that new schema – the producer won’t be allowed to send data with that schema.

This broker-side enforcement is a strong safety net. Kafka’s approach is more “honor system” with Schema Registry; the brokers don’t know about schemas.

Schemas and Topics

Every topic can have at most one schema at a time (with multiple versions over time). If a producer without a schema connects to a topic that has a schema, what happens? By default, Pulsar will allow it if schema validation is not enforced, but it will treat their bytes as bytes schema. This can be dangerous (someone writing raw bytes to a structured topic), so Pulsar has a setting schemaValidationEnforced you can enable to require that producers use the topic’s schema. If enabled, a producer who doesn’t have a schema or has one that doesn’t match will be rejected.

For Kafka folks: this is like forcing all producers to go through Schema Registry and not allow schema-less writes – something you can’t do in Kafka natively.

Using the CLI for Schemas

Pulsar provides pulsar-admin schemas commands to manually manage schemas if needed:

- Upload a schema: If you want to pre-register a schema for a topic before producing, you can use

schemas upload. For example, you have an Avro schema fileMyType.avsc, you can do:

- This registers the schema (Schema type AVRO, schema definition in JSON inside the avsc). After this, any producer must conform to this schema.

- Get schema: You can retrieve the latest schema or a specific version:

- This will output the schema JSON, including type, definition, and version.

- Delete schema:

schemas delete topicif you want to remove it (topic becomes schema-less). Pulsar requires the topic to be unused to delete a schema, typically. - Schema versions: If you want an older version,

schemas get --version N topic.

These are akin to calls you’d make to a Schema Registry REST API in Kafka, but now it’s built-in.

Example scenario: Suppose you have a Kafka topic using Avro and Confluent Schema Registry. To migrate to Pulsar:

- You could take the Avro schema from Schema Registry (as an Avro schema JSON or .avsc) and use

pulsar-admin schemas uploadto register it on the Pulsar topic. - Then produce data using Pulsar’s Avro schema. Pulsar will tag messages with a schema version. Consumers can ask the broker for the schema if they don’t have it (the Java client does this automatically).

- Evolve the schema: if you change your Avro schema (say add a field), a Pulsar producer will send that as a new schema info. The broker will check compatibility; if okay, it will register that as a new version (v2) and allow it. Consumers that connect later will get the latest schema by default, and can also fetch older schema if needed.

Built-in vs External Schema Registry

Benefits of Pulsar’s approach:

- No separate service to maintain – the schema registry is part of Pulsar’s metadata.

- Automatic enforcement: less risk of bad data.

- Schema travels with topic data (conceptually): when a consumer connects and gets data, if it has the wrong schema version, the broker can supply the needed schema. This is similar to how Confluent clients fetch from the registry by ID, but in Pulsar it’s handled seamlessly by the broker.

- Supports schema on multi-tenancy: each tenant/namespace can have its own compatibility setting. Admins can enforce that all topics in a namespace use a certain compatibility mode, etc.

Considerations:

- If you don’t use schemas, Pulsar treats messages as just bytes (Schema.BYTES). That’s fine; you can still use Pulsar like Kafka without Schema Registry. But if you care about data formats, why not use the built-in feature?

- Schemas add a tiny overhead: a schema version is attached to messages. But it’s negligible (a small int).

- Pulsar’s Schema Registry is currently not as feature-rich as Confluent’s in terms of storing schema IDs for massive numbers of subjects or global compatibility across many topics. It’s more tightly coupled to topics. But for most uses, that’s exactly what you want.

- You might not have GUI tooling like Confluent’s UI for schema browsing (unless using StreamNative Console or something that surfaces it). However, CLI and REST API (yes, Pulsar has admin REST endpoints for schemas as well) are available.

Schema Evolution Example

Imagine you have a JSON schema (basically, a structured JSON expected). Pulsar can infer it or you can define it. You publish a few messages with schema v1. Now you need to add a field. If you set compatibility to FULL, you must add it in a backward-compatible way (e.g., make it optional or provide a default). You then update your consumer code and producer code to use the new schema. The producer sends the new schema upon the first message. The broker checks (okay, new field has default, it’s backward compatible) – good. It registers schema version 2.

Existing consumers that haven’t updated schema can actually continue – Pulsar will deliver data and can provide the old schema version if needed. But typically, if the consumer is using a structured API, it will need the new class to parse the new messages. This is similar to Kafka – you need to update consumers to handle new fields, or they’ll ignore unknown JSON fields perhaps.

One nice thing: Pulsar’s client libraries often auto-handle schema versioning. For Avro, if a consumer still has old schema, it might drop unknown fields but not crash. If using generic records, you could even access fields dynamically.

Multi-language and Schema

Pulsar supports schemas across Java, Python, Go, C++ etc. The client libraries can all fetch and decode using the stored schema info. For example, a Java producer could write Avro, and a Python consumer can consume by getting the Avro schema and using Avro library to decode.

Kafka in multi-lang with Avro often relies on everyone using Confluent’s wire format and each language having the Avro schema available. Pulsar simplifies that by making the broker the authority on schema.

Schema Enforcement Modes

As mentioned:

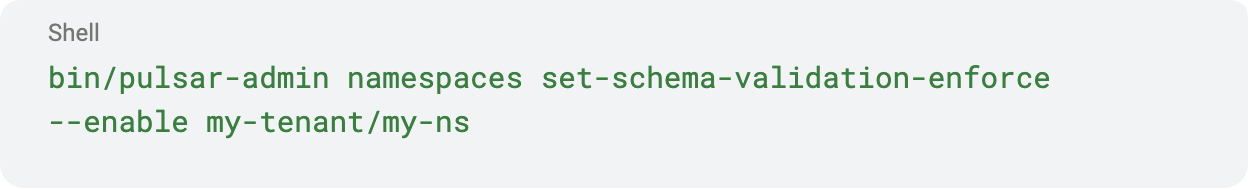

isAllowAutoUpdateSchema: When true (default), producers can auto-update schema if compatible. If false, the broker will not allow new schema versions via producers – you’d have to manually update via admin. In many cases, leaving it true is fine for agility.schemaValidationEnforced: When true, any producer without a matching schema is denied. For instance, if some rogue app tries to write raw bytes or a different schema, it’s blocked. Kafka has no such enforcement – if an app doesn’t use the registry, it can still write and cause problems down the line. Pulsar can prevent that scenario.

You can enable schema validation at broker startup (config) or namespace level:

CLI Walkthrough Example

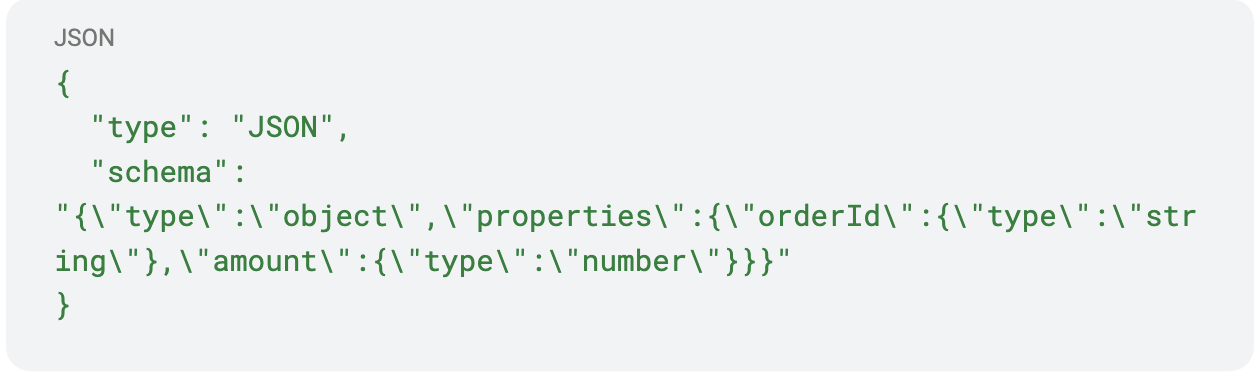

Let’s say we have a JSON schema for an Order:

(This is roughly how Pulsar stores JSON schema internally as JSON string.)

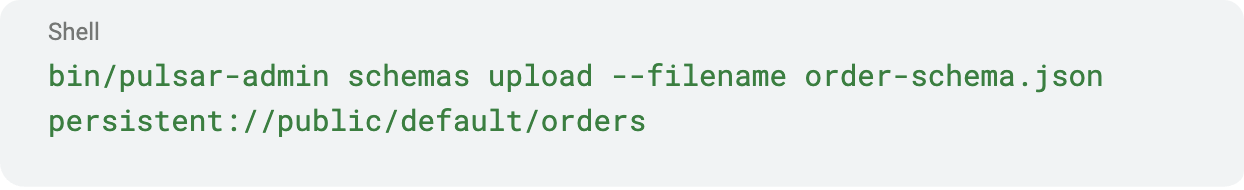

We can upload it:

This registers the schema (version 1). Now produce a message:

(Assuming -s could take a schema definition file to know how to serialize; in practice, you might write a small app or use pulsar-perf with a schema.)

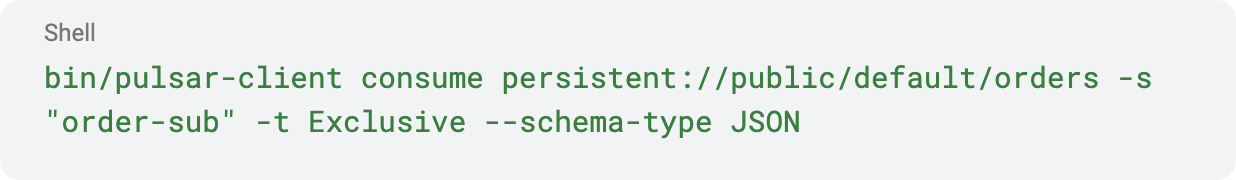

Now consumer:

This will receive messages and output them. The client automatically gets the schema from the broker (because we specified schema-type JSON, it knows to fetch the schema to decode into JSON).

If we change the schema (add a field customerName), we’d update the schema JSON and do an upload or let a producer auto-update it (if using an API, the producer would send the new schema on connect).

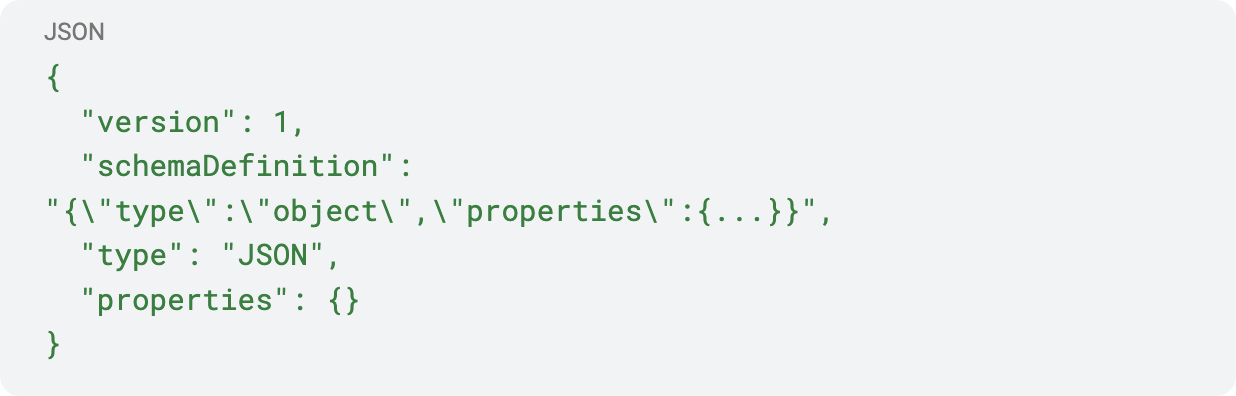

Using pulsar-admin schemas get persistent://public/default/orders we would see versions and definitions. It might output something like:

This confirms what’s stored.

Key Takeaways

- Pulsar’s integrated schema registry provides schema storage and enforcement without an external service. It ensures that producers and consumers agree on data format, improving reliability.

- Schema compatibility checks are done by brokers. You can configure strategies (BACKWARD, FORWARD, FULL, etc.) similar to Kafka’s Schema Registry rules. If a producer’s schema change is incompatible, the broker will refuse it – preventing bad data from ever being written.

- No separate schema IDs to manage in your app – the Pulsar client handles schema versioning. When a consumer receives a message, it can ask the broker for the schema at that version if it doesn’t have it, ensuring it can decode the message.

- CLI and Admin APIs allow manual schema operations: uploading a schema preemptively, deleting or fetching schemas. This is useful for governance or when migrating from an external schema store.

- Enabling schema validation enforcement (

schemaValidationEnforced) provides strong guarantees that all producers adhere to the declared schema. This is something Kafka cannot do natively – in Pulsar you can catch rogue producers at publish time. - For a Kafka engineer, using Pulsar schemas means a more streamlined architecture (one less component to run) and potentially safer schema evolution. It might require refactoring your producers/consumers to use Pulsar’s

SchemaAPI rather than raw byte producers, but the benefits in data quality can be worth it.

In the next section, Part 7, we’ll look at Pulsar Security for Kafka Admins – covering authentication/authorization and how Pulsar’s multi-tenant security compares to Kafka’s ACLs and SASL.

-----------------------------------------------------------------------------------------------------------

Want to go deeper into real-time data and streaming architectures? Join us at the Data Streaming Summit San Francisco 2025 on September 29–30 at the Grand Hyatt at SFO.

30+ sessions | 4 tracks | Real-world insights from OpenAI, Netflix, LinkedIn, Paypal, Uber, AWS, Google, Motorq, Databricks, Ververica, Confluent & more!

Newsletter

Our strategies and tactics delivered right to your inbox

.png)

.png)