The Event‑Driven Agent Era: Why Streams Matter Now (Event-Driven Agents, Part 1)

Introduction

AI has evolved through distinct waves — from early predictive models to the recent generative AI boom — and now into the era of agentic AI, where autonomous agents can act, adapt, and collaborate in real time. In this new phase, simply having smart models isn’t enough; the way these AI agents communicate and act upon incoming data becomes critical. This post kicks off our series by exploring why event-driven data streams are the key to unlocking the full potential of AI agents in this real-time, event-driven era. We’ll discuss the limitations of traditional architectures and how streaming-centric designs address those challenges, enabling more scalable and responsive AI systems.

The Event-Driven Agent Era at a Glance

In the agentic AI era, software “agents” don’t just generate content or predictions; they have the agency to make decisions and take actions autonomously. Think of an AI agent as a specialized microservice with a brain: it perceives incoming data, reasons over state, and initiates actions (including communicating with other agents). However, to be truly useful, such agents require more than just clever models and tools. They need a robust infrastructure to support real-time data flow, security to protect and govern sensitive information, and scalability to operate in concert across an organization. In other words, the underlying architecture must let agents work together promptly and seamlessly, not in isolation.

Traditional request-response architectures (e.g. REST APIs, synchronous workflows) are ill-suited for this new breed of AI. Rigid, query-driven designs can’t keep up with dynamic, continuous streams of events. In a request-driven system, each agent or service must explicitly poll or call others to get updates, introducing delays and tight coupling. Such architectures quickly become bottlenecks that limit scalability and make coordination between multiple agents a nightmare. Agents might be starved of fresh data or spend too much time waiting on each other. Clearly, the old approaches break down when faced with the speed and complexity of modern AI applications.

What today’s AI agents demand is the ability to react asynchronously and in real time to an ever-changing environment. This calls for embracing an Event-Driven Architecture (EDA) powered by a streaming platform. In an event-driven system, the mindset flips: instead of agents making direct calls for data, they subscribe to relevant data streams and publish events when they have new information or outcomes. Agents become producers and consumers of events, all flowing through a central event hub or bus. This way, each agent is always working with the latest data and can react the moment something important happens, without being explicitly invoked by others. As we’ll see, this shift to streaming events brings major benefits for building AI that is robust, scalable, flexible and responsive.
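To make the producer/consumer idea concrete, here is a minimal sketch of two agents talking through a bus. It is an in-process toy, not a real streaming client: `EventBus`, the topic names, and both agent functions are hypothetical, and delivery here is a synchronous function call, whereas a real platform would deliver asynchronously over the network.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Event = dict
Handler = Callable[[Event], None]


class EventBus:
    """Toy in-process stand-in for a streaming platform (hypothetical)."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: Event) -> None:
        # Deliver to every subscriber of the topic. The publisher never
        # needs to know who (if anyone) is listening.
        for handler in list(self._subscribers[topic]):
            handler(event)


bus = EventBus()
alerts: List[str] = []


def anomaly_agent(event: Event) -> None:
    # Consumes sensor readings; produces an anomaly event when a
    # (made-up) threshold is exceeded.
    if event["value"] > 100:
        bus.publish("anomalies", {"sensor": event["sensor"], "value": event["value"]})


def notifier_agent(event: Event) -> None:
    # Consumes anomaly events; it has no reference to anomaly_agent.
    alerts.append(f"anomaly on {event['sensor']}: {event['value']}")


bus.subscribe("sensor-readings", anomaly_agent)
bus.subscribe("anomalies", notifier_agent)

bus.publish("sensor-readings", {"sensor": "pump-1", "value": 42})
bus.publish("sensor-readings", {"sensor": "pump-2", "value": 130})
print(alerts)  # ['anomaly on pump-2: 130']
```

Neither agent calls the other directly. Adding a third agent that also cares about anomalies would be one more `subscribe` call, with no change to `anomaly_agent`.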

Why Traditional Architectures Fall Short

Let’s examine the shortcomings of the traditional approach through a familiar analogy: microservices. Early on, distributed systems often involved tightly-coupled services making synchronous calls to each other. This led to complex webs of dependencies — if one service slowed down or failed, it directly impacted others. Similarly, a naive implementation of multiple AI agents might have them calling each other’s APIs or writing to the same databases, resulting in tangled interactions and fragile systems. In fact, deploying hundreds of independent AI agents without a structured communication framework would result in chaos: agents could become fragmented, inefficient, and unreliable just like uncoordinated microservices.

Microservice architecture evolved to solve these issues by introducing asynchronous, event-driven communication. Instead of every service talking to every other service directly, events are exchanged via a message broker or streaming platform. This decouples the senders and receivers — services simply emit events and any interested parties consume them. The breakthrough came with event-driven architectures where services react to changes asynchronously, enabling real-time responsiveness and better scalability. AI agents today need the same kind of shift in how they interact.

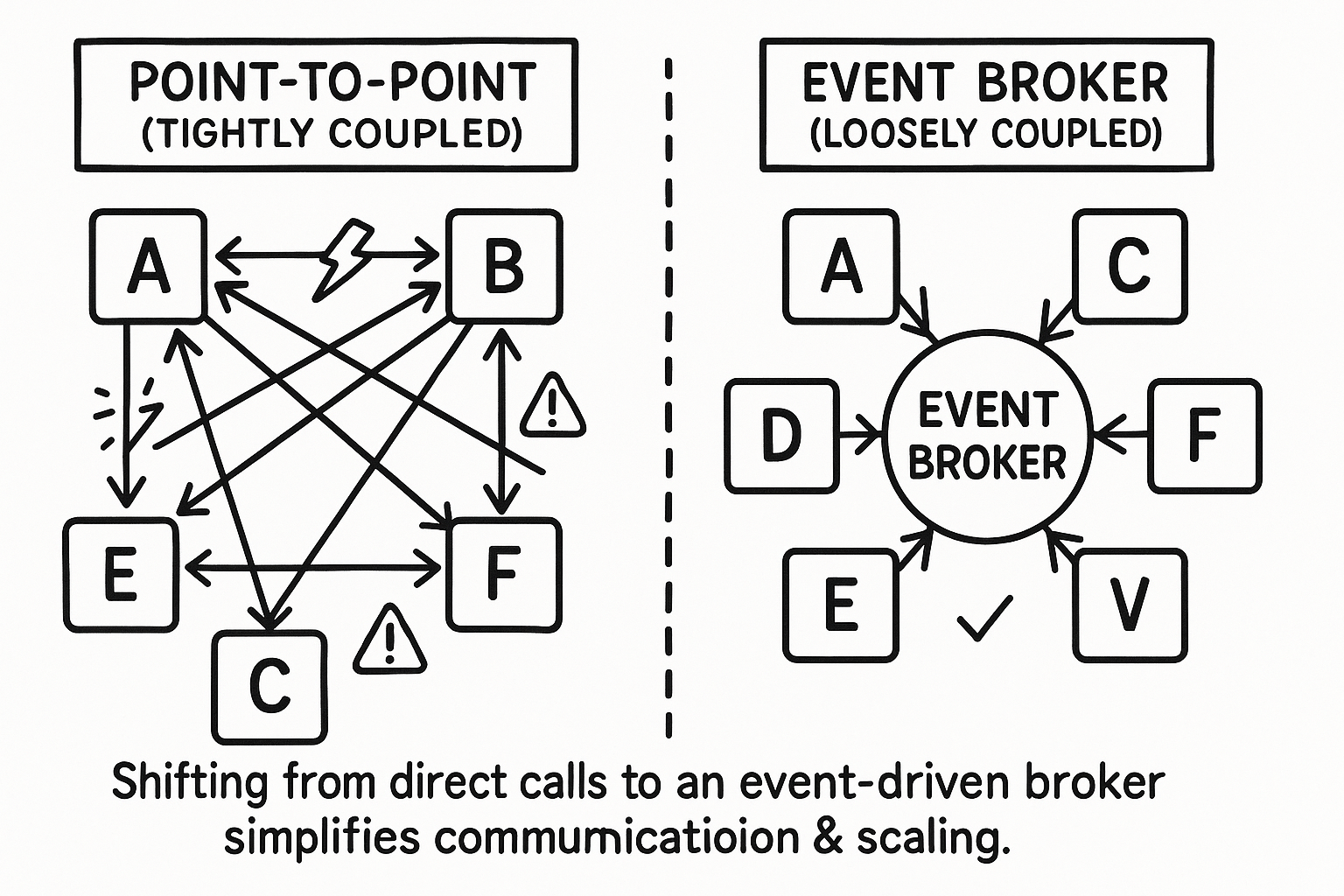

Figure 1: Shifting from tightly coupled point-to-point interactions (left) to an event broker model (right) simplifies communication and scaling for microservices.

The diagram above illustrates how a set of services (or agents) can be coordinated via an event broker. On the left, each microservice must know about and directly communicate with others, forming a brittle network. On the right, each one simply emits events and listens for events on the broker, decoupling their logic. For AI agents, this means they don’t need hard-coded knowledge of each other. An agent can announce, “I have new data” or “an anomaly occurred” as an event, and any other agent interested in that type of event can respond accordingly. This architecture drastically reduces interdependencies and avoids the spaghetti mess of direct integrations. It also provides a natural buffer and fault tolerance: if an agent goes down, the event stream can persist the data until it comes back or a replacement picks up, instead of causing a cascade of failures.

Another limitation of traditional designs is the reliance on static data or batch processing. Many AI workflows historically operated on stale data snapshots, e.g. daily batch updates or periodic database queries. For an autonomous agent making decisions, stale data can be disastrous (imagine a trading agent acting on last week’s prices!). Request/response systems can’t easily push updates to agents in real time; at best, agents would have to constantly poll for changes, which doesn’t scale and still introduces latency. By contrast, event streaming delivers new data to subscribers as soon as it is published. There are no artificial delays waiting for the next batch or API call; agents are always working with live feeds. As a result, they can respond to opportunities and risks while the data is still current.
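A quick back-of-the-envelope calculation shows where polling latency comes from. The sketch below assumes polls fire at exact multiples of a fixed interval and uses made-up event times; it only measures how long each event sits unseen before the next poll.

```python
import math


def polling_delay(event_time: float, poll_interval: float) -> float:
    """Seconds an event waits until the next scheduled poll picks it up."""
    next_poll = math.ceil(event_time / poll_interval) * poll_interval
    return next_poll - event_time


# Hypothetical event arrival times (seconds) and a 30-second poll interval.
event_times = [1.0, 12.5, 31.0, 59.0]
poll_interval = 30.0

delays = [polling_delay(t, poll_interval) for t in event_times]
average_delay = sum(delays) / len(delays)

print(delays)         # [29.0, 17.5, 29.0, 1.0]
print(average_delay)  # 19.125
```

Shrinking the interval lowers the wait but multiplies the number of requests, most of which return nothing new. With push delivery the wait is set by transport and processing time instead of by a schedule.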

Why Streams Matter for AI Agents

Streaming data platforms and event-driven architecture directly address the challenges above, providing a foundation for real-time, scalable intelligence. Here are some of the key benefits of adopting streams in an AI agent system:

- Real-Time Data Access: Agents receive data as continuous streams of events, eliminating batch delays. This ensures decisions are made on the freshest possible information, not outdated snapshots. Anomaly detectors, for example, can catch issues within seconds of occurrence, because they don’t have to wait for a scheduled job or request to pull data.

- Loose Coupling of Agents: Agents communicate through the event bus rather than direct one-to-one calls. This decoupled architecture reduces complexity and interdependence between agents. Each agent simply declares the types of events it produces and consumes. The benefit is easier integration of new agents and the ability to change or scale parts of the system without breaking everything else.

- Scalability and Flexibility: Because agents process events asynchronously, you can scale out the number of agents or instances of an agent type seamlessly. New agents can join the system without disrupting existing workflows. Workloads naturally distribute via the streaming platform (much like how consumer groups work in Kafka or Pulsar), enabling the system to handle spikes in events or additional tasks by just adding consumers. This architecture is future-proof in that you can plug in any model or tool as an agent, and as long as it adheres to the event interfaces, it participates in the ecosystem without special coordination code.

- Fresh, AI-Ready Data Streams: An event-driven platform can preprocess and transform data on the fly, preparing it for AI consumption. For instance, streaming pipelines might convert raw text into vector embeddings in real time and publish those embeddings as events. Agents subscribing to these enriched event streams get the benefit of up-to-date features and context (e.g. updated user profiles, live sensor readings) without needing to batch-retrain or constantly query databases. The streaming layer effectively feeds intelligent agents with a constant flow of relevant, structured information, which is crucial for techniques like real-time recommendation or reinforcement learning.

- Resilience and Fault Tolerance: The event log acts as a durable buffer. If an agent goes down momentarily, it can replay missed events from the stream once it recovers, ensuring no data or trigger is lost. This is far more robust than synchronous RPC calls which might fail or time out. The decoupling also localizes failures — one stalled agent doesn’t directly block others, as long as the event stream is flowing. Overall system reliability increases, which is important as you scale to dozens or hundreds of agents.

- Multiplexing and Replay: A streaming platform enables an upstream agent to publish results to a topic once, while multiple downstream agents can independently subscribe to that topic to consume events in real time. This pattern eliminates the need for direct integrations, ensuring communication remains flexible, decoupled, and highly scalable. New downstream agents can be introduced seamlessly without requiring changes to upstream logic. In addition, the ability to replay or rewind past events is critical for scenarios such as debugging, retraining models, or restoring state after failures. Rather than losing historical context, agents can reprocess prior events deterministically to achieve consistent outcomes. By combining multiplexed delivery with reliable replay, streaming platforms provide the foundation for resilient, scalable, and intelligent agent ecosystems.
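Several of the benefits above (fan-out to multiple consumers, catching up after downtime, and replay) come from the same mechanism: an append-only log in which each consumer tracks its own read position. The sketch below is a toy model under that assumption. `TopicLog` and the consumer names are hypothetical, and it leaves out partitions and consumer groups, which is how real platforms split one stream across several instances of the same agent.

```python
from typing import Dict, List


class TopicLog:
    """Toy append-only log; each consumer keeps its own read offset."""

    def __init__(self) -> None:
        self._events: List[dict] = []
        self._offsets: Dict[str, int] = {}

    def append(self, event: dict) -> int:
        """Store an event and return its offset in the log."""
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, consumer: str) -> List[dict]:
        """Return events this consumer has not seen yet and advance its offset."""
        start = self._offsets.get(consumer, 0)
        batch = self._events[start:]
        self._offsets[consumer] = len(self._events)
        return batch

    def seek(self, consumer: str, offset: int) -> None:
        """Move a consumer's offset, e.g. back to 0 for a full replay."""
        self._offsets[consumer] = max(0, min(offset, len(self._events)))


log = TopicLog()
log.append({"id": 1, "text": "order created"})
log.append({"id": 2, "text": "order paid"})

# Fan-out: the "scorer" agent reads the first two events.
scorer_first = log.poll("scorer")

# The "auditor" agent was down so far. One more event arrives, and when the
# auditor comes back it catches up on everything it missed.
log.append({"id": 3, "text": "order shipped"})
auditor_catchup = log.poll("auditor")

# The scorer only receives what is new to it.
scorer_next = log.poll("scorer")

# Replay: rewind the scorer to the beginning and reprocess the full history.
log.seek("scorer", 0)
scorer_replay = log.poll("scorer")

print([e["id"] for e in scorer_first])     # [1, 2]
print([e["id"] for e in auditor_catchup])  # [1, 2, 3]
print([e["id"] for e in scorer_next])      # [3]
print([e["id"] for e in scorer_replay])    # [1, 2, 3]
```

The producer appended each event once. Reading never removes anything from the log, which is what makes both independent consumption and replay possible.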

Beyond these benefits, an event-driven approach inherently supports better governance and observability. All events flowing through a central platform can be logged, monitored, and even audited in real time. This is a big advantage for organizations concerned with compliance, security, or just debugging complex agent behaviors. A streaming platform can enforce data quality checks and security policies on the events, ensuring that agents only operate on trustworthy data. Traditional point-to-point integrations struggle to offer such a unified view and control. By using a centralized streaming backbone, you gain a “single source of truth” for what each agent did and why, since their inputs and outputs are all events on the bus.
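As a rough illustration of enforcing a policy at the bus while keeping an audit trail, the sketch below checks every published event against a per-topic list of required fields and records the decision either way. The topic name, the field names, and the `publish` function are all hypothetical; a real platform would typically do this with a schema registry and access controls rather than hand-written checks.

```python
from typing import Dict, List, Set

# Hypothetical per-topic policy: fields every event must carry.
REQUIRED_FIELDS: Dict[str, Set[str]] = {
    "payments": {"agent_id", "amount", "currency"},
}

audit_log: List[dict] = []  # every publish attempt, accepted or not
accepted: List[dict] = []   # events that passed the check


def publish(topic: str, event: dict) -> bool:
    """Accept the event only if it has all required fields; always audit."""
    missing = REQUIRED_FIELDS.get(topic, set()) - set(event)
    ok = not missing
    audit_log.append({
        "topic": topic,
        "agent_id": event.get("agent_id"),
        "accepted": ok,
        "missing": sorted(missing),
    })
    if ok:
        accepted.append(event)
    return ok


publish("payments", {"agent_id": "billing-agent", "amount": 12.5, "currency": "EUR"})
publish("payments", {"agent_id": "rogue-agent", "amount": 99})

for entry in audit_log:
    print(entry)
```

Because every event passes through one place, the audit trail covers every agent without any of them having to implement logging themselves.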

Finally, streams enable what is called a “shift-left” approach: moving computation and decision-making closer to the data source. Instead of waiting for data to be stored and then processed, the processing happens in motion. This takes the store-then-query step off the critical path, so latency depends on event delivery and processing time rather than on a batch schedule. In practice, that means an AI agent can trigger actions in near real time as events arrive, which is critical for use cases like fraud detection, IoT automation, or personalized user experiences. The faster an agent can react to an event, the more value it can provide.
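Here is a small sketch of what processing in motion can look like for a fraud-style check. It assumes events arrive in timestamp order, and the rule (more than three transactions per card within 60 seconds) is invented for illustration. The decision is made as each event arrives, without first writing the data to a store and querying it later.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Iterator, Tuple

Txn = Tuple[float, str]  # (timestamp in seconds, card id)


def flag_bursts(
    txns: Iterable[Txn], window: float = 60.0, limit: int = 3
) -> Iterator[Txn]:
    """Yield a transaction as soon as its card exceeds `limit` events in `window` seconds."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    for ts, card in txns:
        times = recent[card]
        times.append(ts)
        # Drop timestamps that have fallen out of the sliding window.
        while times and ts - times[0] > window:
            times.popleft()
        if len(times) > limit:
            yield (ts, card)


# Hypothetical stream: card "A" makes four transactions within 30 seconds.
stream = [(0.0, "A"), (10.0, "A"), (15.0, "B"), (20.0, "A"), (30.0, "A"), (200.0, "A")]
flagged = list(flag_bursts(stream))
print(flagged)  # [(30.0, 'A')]
```

The generator keeps only the last few timestamps per card, so state stays small and the flag is raised on the fourth transaction itself rather than at the end of a batch.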

Conclusion

The emergence of event-driven streaming architecture marks a turning point for AI systems. We no longer have to bolt intelligent agents onto brittle, request-driven frameworks and hope for the best. By making events the lingua franca of our AI agents, we enable them to truly operate in real time and at scale. Streams provide a live data fabric that keeps agents in sync and informed, while avoiding the pitfalls of tight coupling and stale data. In short, streams matter now because they transform isolated AI capabilities into a coordinated, adaptive system of agents. Organizations that embrace this streaming-first approach will be positioned to build smarter, more responsive, and more scalable AI solutions that can evolve with the ever-increasing pace of the AI revolution.

This is just the beginning of our exploration. In upcoming posts, we will dive deeper into how to design and implement event-driven agent systems: from the anatomy of an AI agent, to multi-agent design patterns, to real-world architecture examples. We’ll illustrate how an enterprise-grade streaming platform such as StreamNative Cloud forms the backbone of the agentic AI stack, and how you can start building your own event-driven agents.

Next Step: Get Involved

To learn more and see these concepts in action, be sure to join us at the Data Streaming Summit on September 30, 2025. It’s a great opportunity to hear from experts and architects who are building real-time AI systems (and yes, agentic AI will be a hot topic!).
